2024 Survey Paper on 3D LLMs

This survey paper by Ma et al. (2024) explores methods for combining LLMs with 3D data representations, such as point clouds and Neural Radiance Fields, to perform tasks like scene understanding, captioning, and navigation.

The authors highlight the potential benefits of LLMs in advancing spatial comprehension and interaction within AI systems, but also acknowledge that there is still a need for new approaches to fully leverage their capabilities.

Section 1, Introduction, explains how LLMs have evolved in natural language processing and the potential they hold in 3D environments, which are crucial for real-world applications like robotic navigation and autonomous systems.

Section 2, Background, covers the Vision-Language Models (VLMs) and Vision Foundation Models (VFMs) used for image and video tasks, 3D representations (e.g., point clouds, voxel grids, neural fields), LLM architectures (encoder-decoder and decoder-only), and the emergent abilities of LLMs (in-context learning, reasoning, and instruction-following).
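To make the difference between two of these representations concrete, here is a minimal sketch of turning a point cloud into a sparse voxel grid. It is only an illustration: the `voxelize` helper, the sample points, and the 0.5 voxel size are assumptions made for this example and do not come from the paper.

```python
from collections import defaultdict


def voxelize(points, voxel_size):
    """Count how many points fall into each cubic voxel of side `voxel_size`."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    counts = defaultdict(int)
    for x, y, z in points:
        # Floor division assigns each point to the cell that contains it,
        # including points with negative coordinates.
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        counts[key] += 1
    return dict(counts)


# Hypothetical toy point cloud: four (x, y, z) points.
points = [(0.1, 0.2, 0.3), (0.4, 0.1, 0.2), (1.2, 0.0, 0.9), (-0.1, 0.5, 0.5)]
grid = voxelize(points, voxel_size=0.5)
```

The point cloud keeps exact coordinates, while the voxel grid trades that precision for a regular structure: the first two points collapse into the same cell, so the grid holds three occupied voxels for four points.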

Section 3, 3D Tasks and Metrics, outlines various tasks where 3D vision-language models are applied, including 3D captioning, 3D grounding, 3D question answering, 3D dialogue, and 3D navigation and manipulation.
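This summary does not list the individual metrics, but 3D grounding is commonly scored by checking whether the predicted bounding box overlaps the ground-truth box above an intersection-over-union (IoU) threshold. The sketch below assumes axis-aligned boxes given as `(min_corner, max_corner)` and a threshold of 0.25; the function names and sample boxes are hypothetical and chosen only for illustration.

```python
def box_volume(box):
    """Volume of an axis-aligned box; zero if the box is empty or inverted."""
    (x0, y0, z0), (x1, y1, z1) = box
    return max(0.0, x1 - x0) * max(0.0, y1 - y0) * max(0.0, z1 - z0)


def iou_3d(a, b):
    """Intersection over union of two axis-aligned 3D boxes."""
    (a_min, a_max), (b_min, b_max) = a, b
    inter_min = tuple(max(p, q) for p, q in zip(a_min, b_min))
    inter_max = tuple(min(p, q) for p, q in zip(a_max, b_max))
    inter = box_volume((inter_min, inter_max))
    union = box_volume(a) + box_volume(b) - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds, targets, threshold=0.25):
    """Fraction of predictions whose IoU with the target meets the threshold."""
    hits = sum(iou_3d(p, t) >= threshold for p, t in zip(preds, targets))
    return hits / len(targets)


# Hypothetical predictions and ground-truth boxes.
preds = [((0, 0, 0), (2, 2, 2)), ((0, 0, 0), (1, 1, 1))]
targets = [((1, 0, 0), (3, 2, 2)), ((2, 2, 2), (3, 3, 3))]
accuracy = grounding_accuracy(preds, targets)
```

Here the first pair overlaps with an IoU of one third and counts as a hit, while the second pair does not overlap at all, so the accuracy at the 0.25 threshold is 0.5.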

Section 4, How LLMs Process 3D Information, delves into how LLMs can be adapted to handle 3D scene information, typically by mapping 3D features into the input space of LLMs through alignment modules.

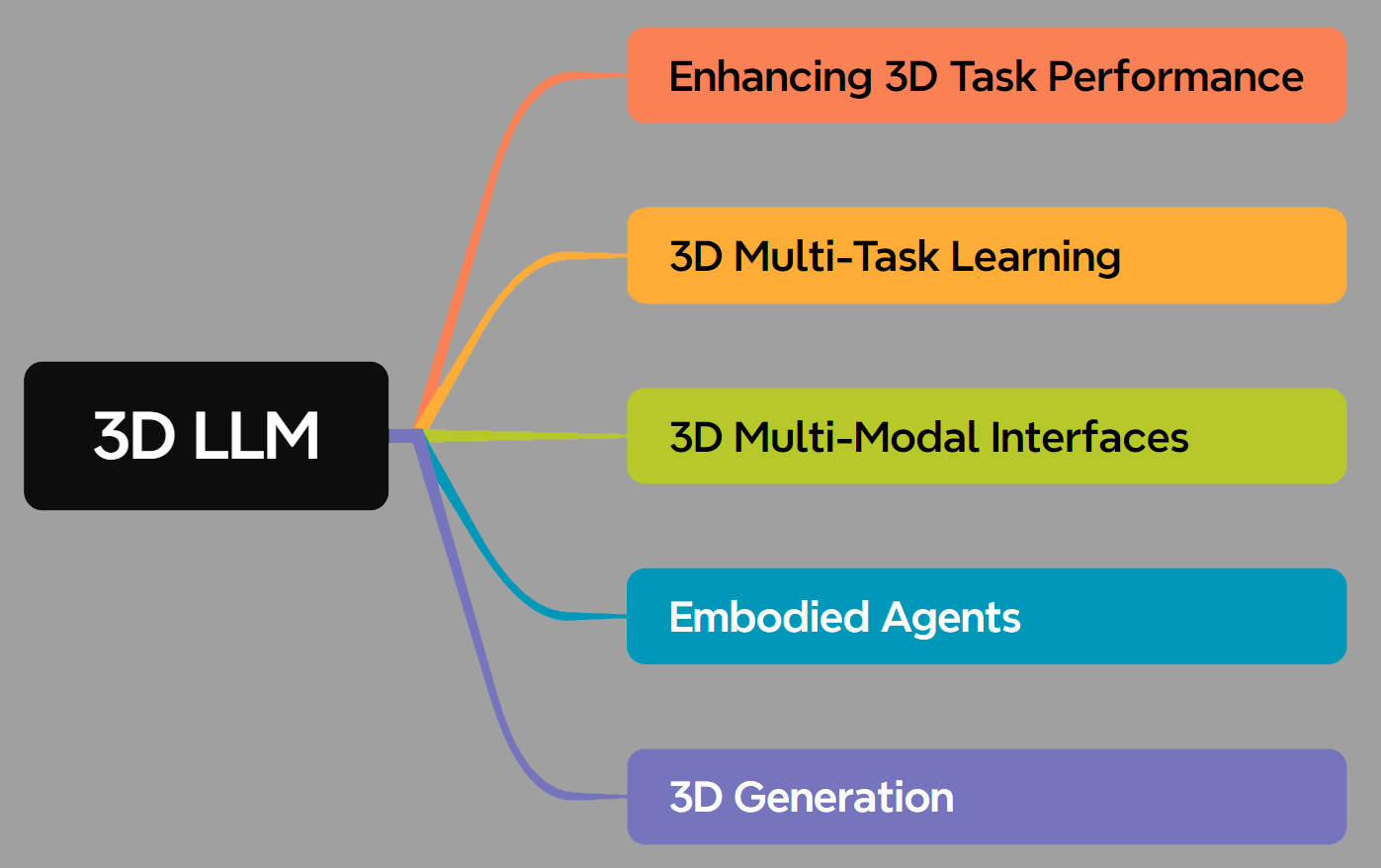
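As a rough illustration of what an alignment module does, the sketch below projects per-object 3D features into the LLM's embedding dimension and prepends them to the text token embeddings. A single linear projection is just one simple option and is an assumption here, not necessarily the design of any specific method in the survey. The weights are random placeholders (in practice they would be learned), and the dimensions and helper names are made up for this example.

```python
import random


def make_projection(in_dim, out_dim, seed=0):
    """Create a randomly initialised linear layer (a stand-in for learned weights)."""
    rng = random.Random(seed)
    scale = 1.0 / in_dim ** 0.5
    weight = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def project(features, weight, bias):
    """Apply y = W x + b to every feature vector."""
    return [
        [sum(w * x for w, x in zip(row, feat)) + b for row, b in zip(weight, bias)]
        for feat in features
    ]


def build_llm_input(scene_features, text_embeddings, weight, bias):
    """Map 3D features into the LLM embedding space and prepend them to the text tokens."""
    scene_tokens = project(scene_features, weight, bias)
    return scene_tokens + text_embeddings


FEAT_DIM, LLM_DIM = 6, 8  # toy sizes; real models use far larger dimensions
weight, bias = make_projection(FEAT_DIM, LLM_DIM)
scene_features = [[0.1 * (i + j) for j in range(FEAT_DIM)] for i in range(3)]  # 3 object features
text_embeddings = [[0.0] * LLM_DIM for _ in range(4)]  # 4 placeholder prompt tokens
sequence = build_llm_input(scene_features, text_embeddings, weight, bias)
```

The resulting sequence has seven entries of width `LLM_DIM`: three projected scene tokens followed by four text tokens, which is the shape a language model would expect as input.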

Section 5, Enhancing 3D Tasks with LLMs, describes methods that leverage LLMs to improve performance in 3D tasks by enhancing scene understanding, grounding, and reasoning about object relationships.

Section 6, 3D Multi-Task Learning, discusses the integration of LLMs for performing multiple 3D tasks simultaneously, such as grounding, captioning, and navigation.

Finally, Section 7, Future Directions, concludes by identifying challenges and potential research directions for advancing the field of 3D-LLMs, including improving scalability, data diversity, and real-world applicability.

Main Takeaways

The main takeaways from the paper are:

- 3D-LLM Integration Is Promising but Complex: The integration of Large Language Models (LLMs) with 3D data offers great potential for advancing 3D scene understanding, interaction, and spatial reasoning. However, challenges remain in aligning 3D data representations (such as point clouds and Neural Radiance Fields) with LLMs, particularly in terms of scalability and computational efficiency.

- Unique Capabilities of LLMs in 3D Tasks: LLMs bring valuable features like in-context learning, step-by-step reasoning, and open-vocabulary understanding to 3D tasks. This allows them to effectively generate natural language captions for 3D scenes, answer questions, and assist in navigation and manipulation tasks in physical spaces.

- Multi-Modal Integration for Enhanced Spatial Reasoning: The paper highlights how combining 3D data with LLMs enables more sophisticated applications, such as spatial reasoning, planning, and navigation. LLMs can be used as multi-modal interfaces, providing a unified framework for tasks like 3D captioning, question-answering, and dialogue systems.

- Challenges in 3D Data Representation and Alignment: A key challenge identified is the representation of 3D data. Various formats, such as point clouds, voxel grids, and neural fields, each have advantages and limitations in terms of storage efficiency, accuracy, and rendering quality. Aligning these representations with LLMs while maintaining computational efficiency remains a major hurdle.

- Meta-Analysis of 3D Tasks: The survey covers a broad spectrum of 3D tasks, including object-level and scene-level captioning, dense captioning, grounding, 3D question-answering, and manipulation tasks. It also highlights the current evaluation metrics used in these tasks, emphasizing that while significant progress has been made, there’s still room for improvement, particularly in real-world applicability.

- Potential for Embodied AI Systems: The integration of LLMs into embodied AI systems (e.g., robots) has the potential to revolutionize applications like autonomous navigation, robotic manipulation, and augmented reality by enabling these systems to interact more naturally with their environments using language.

- Future Research Directions: The paper calls for further research in optimizing model architectures for 3D-LLM tasks, improving scalability, and enhancing real-time performance. It also suggests that more diverse datasets and evaluation protocols are needed to push the boundaries of what 3D-LLMs can achieve in real-world environments.

To conclude, the paper suggests that while 3D-LLMs offer transformative potential across multiple domains, significant technical challenges remain, particularly in aligning 3D data representations with LLM capabilities for practical applications.

Link: